By Jared Dodds

The Nov. 4 election looked worrying for many at the beginning, but did it stay that way?

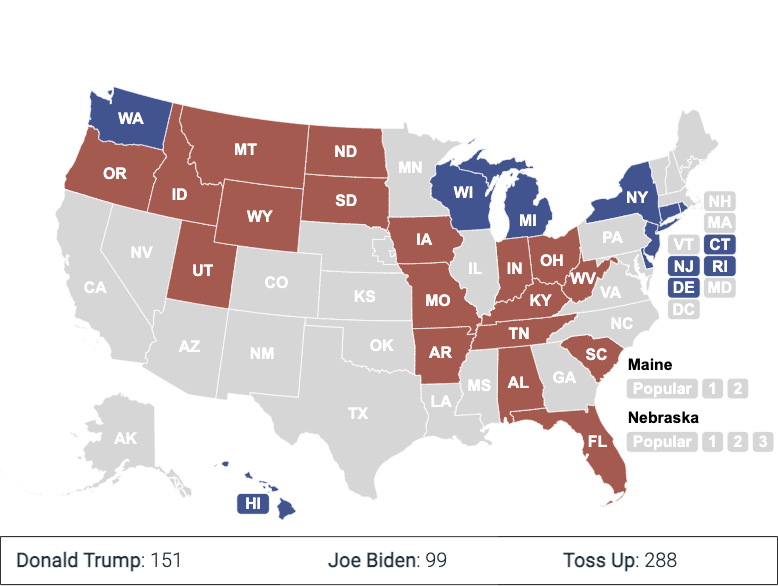

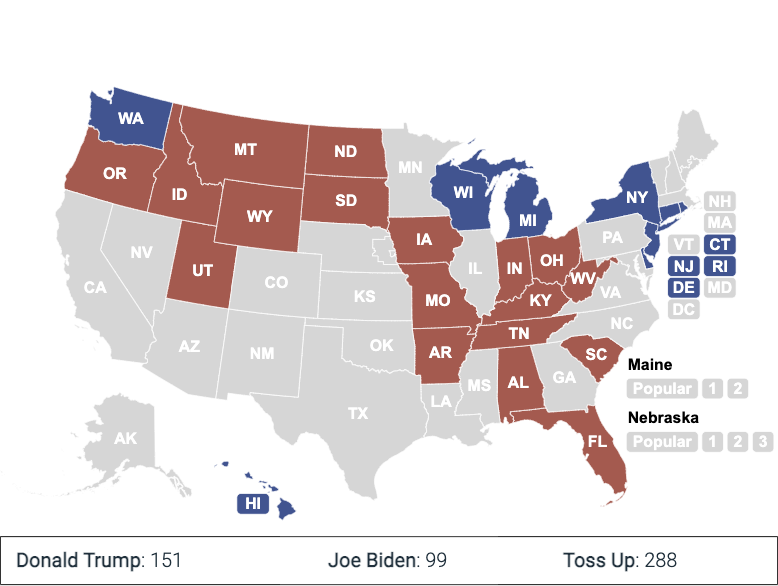

Nov. 4 marked another puzzling election for experts across the world, as Donald Trump looked poised to do the impossible again and claim a second term in office. The professionals sweat out the final verdict, which as we now know, named Joe Biden as the 46th president of the United States. Still, some are calling the death knell for polling, all while facts point in a different direction.

Before getting into specifics, it is important to answer some commonly asked questions about the polling industry. For starters, the practice is more widely used for public opinion and market research than in politics. It’s also important to note that, despite what some may think, polling is a science. Political pollsters use probability theory to collect what’s called a representative sample of the population, then use that sample’s responses to gauge what the electorate thinks on a particular issue.

The most effective way to acquire this sample is through random-digit-dialed phone polls, but because of their prohibitive cost, they are far from the norm. Instead, many pollsters have turned to the internet to cast a wider net for less. The problem is that some feel a truly random sample cannot be produced this way, leading to potentially misleading data.
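To give a sense of what probability theory offers when a sample really is random, here is a minimal sketch of the textbook margin-of-error formula for a simple random sample. The function name and the sample sizes are illustrative assumptions, not figures from any pollster quoted here, and the formula does not carry over to opt-in online panels, which is the heart of the concern above.

```python
import math


def margin_of_error(sample_size: int, proportion: float = 0.5, z: float = 1.96) -> float:
    """Approximate margin of error for a simple random sample.

    proportion=0.5 is the most conservative assumption; z=1.96 corresponds
    to a 95 per cent confidence level. Returns a fraction (0.03 = 3 points).
    """
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    return z * math.sqrt(proportion * (1 - proportion) / sample_size)


# Hypothetical sample sizes, chosen only to show how the error shrinks.
for n in (500, 1000, 2000):
    print(f"n={n}: +/- {margin_of_error(n) * 100:.1f} points")
```

Quadrupling the sample only halves the margin of error, which is part of why large random phone samples get expensive quickly.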

Cristine de Clercy, a professor specializing in Canadian politics at the University of Western Ontario, says reporters have to not only get better at understanding what the data says but also identify whether it is strong in the first place.

“I would say there is good data, and there is bad data,” de Clercy says. “There’s data that is collected responsibly with a somewhat informed understanding of how to collect it. That’s trustworthy. And then there’s some data that just wasn’t.

“This is getting worse with online polling and online surveys, and I say this as someone who does online polling, but the problematical data is ever more present,” she says.

So was there bad data in the 2020 election? The answer isn’t black and white. The first question is what exactly the polls got wrong, and the most egregious answer is Florida. 538, a popular website that aggregates polling data, had Biden winning the Sunshine State by two and a half points. One of the polls it cited was conducted by Emerson College, an organization 538 gives an A- rating for accuracy. That poll, conducted just five days before in-person voting began, had Biden winning the state by six points.

Trump won by over three.

David Coletto, the CEO of Abacus Data, says the mistake came from an underrepresentation of conservative voters in polling, a problem that is also present in Canada.

“Just how polarized the U.S. is. I don’t think Canada’s, I don’t think we’re there yet,” says Coletto, whose company celebrated its 10-year anniversary last July. “But there is still a demographic I think is not accessible to pollsters.”

P.J. Fournier, owner and operator of 338.com, an aggregate website similar to 538 but for Canadian politics, took it a step further, saying it was not just conservatives that polling might have a problem reaching.

“A lot of people say conservatives are underestimated, and that’s not always true,” Fournier says, citing the overestimation of Ontario Tory votes in the 2019 federal election. “What seems to be true is that the more extreme elements on the political spectrum, be it on the right or the left, are underestimated.”

Robert Benzie, the Queen’s Park bureau chief for The Toronto Star, says divisive candidates also have a massive impact on the accuracy of polls. Benzie cites this exact effect when trying to parse out what happened in Florida.

“With some types of political candidates people will not tell the pollsters that they’re voting for them, and I think that’s what happened in Florida with Trump,” he says.

Language barriers can also cause pollsters to miss vast swaths of the population. It happened in Florida, where the Cuban vote came out strong for Trump, and it has the potential to cause problems in Canada as well.

“We’re in B.C., and we aren’t, and frankly we don’t offer a survey in Mandarin or South Asian languages, and those communities are so concentrated that we might miss something,” Coletto says.

All of these problems led to a surprising result in Florida and gave plenty of ammunition to people who wanted to take a shot at polling. But Florida is just one state, and while 538 may have gotten it and North Carolina wrong, it correctly predicted the results of every other race.

All of this means the issue may not have been the information at large, but how it was delivered by journalists and interpreted by the public. Coletto says the number one thing people don’t recognize about polling is how remarkable it is that pollsters can use what is primarily a science to predict something as unpredictable as human behaviour. He thinks journalists can do a better job of reporting that fact.

“The tendency for people and I think for the media sometimes, is to always report on polls when they miss, and not the times they actually do an outstanding job of capturing the mood of the country or how people are feeling on a particular issue,” he says.

The media relies not only on polls, however, but also on aggregate sites like 538 and 338. Fournier writes for Maclean’s magazine, sharing his analysis of the polls he aggregates, and 538 was acquired by ABC in 2018. This has led to a debate over whether we should be relying on a service that doesn’t actually have a foothold in science.

“Mathematicians sometimes say aggregating polls is not a real statistical method, you should not do that,” Fournier says. “They have a point from a mathematical point of view if you take two polls using different methods, different samples, different field days that you should not average them out.”

But Fournier goes on to say the value of aggregation lies not in theory but in its results.

“I’m a scientist, and what we do, we make approximations of nature,” he says. “And so even though by the book, mathematically, it’s not quite what we should do, if it works 90 per cent of the time, over an extended period of time, it cannot be useless.”
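To make the idea of “averaging them out” concrete, here is a minimal sketch of one simple way to combine polls: weighting each poll’s margin by its sample size. This is an illustration only, not the method used by 538 or 338, whose models are not described here. The pollster names, sample sizes and margins are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Poll:
    pollster: str      # hypothetical name, for illustration only
    sample_size: int   # number of respondents
    margin: float      # candidate A's lead over candidate B, in points


def weighted_average_margin(polls: list[Poll]) -> float:
    """Average the polls' margins, weighting each by its sample size."""
    total_respondents = sum(p.sample_size for p in polls)
    if total_respondents == 0:
        raise ValueError("need at least one poll with respondents")
    return sum(p.margin * p.sample_size for p in polls) / total_respondents


# Made-up polls: different sizes, different results.
polls = [
    Poll("Pollster A", 1000, 6.0),
    Poll("Pollster B", 500, 1.0),
    Poll("Pollster C", 1500, 2.0),
]

average = weighted_average_margin(polls)
print(f"Aggregate margin: candidate A +{average:.1f}")
```

The sketch also shows what the mathematicians object to: it treats three polls with different methods, samples and field days as though they were one large survey. Fournier’s defence is that the shortcut earns its keep through its track record rather than its theory.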

De Clercy shares Fournier’s opinion, as long as the polls that aggregators draw on are sound. “I would never argue that a site like 538 or the Canadian version 338, they should never be shut down because good data is always more information,” she says.

The problem arises when people with bad data point to these websites’ outcomes to justify their own numbers, simply because they arrived at the same result.

Benzie says while he may look at these websites with a passing interest, he would not use them as content to produce a story.

Mirroring the political system many pollsters try to understand, there is division. Both sides of the aisle are adamant they are right, even as the data points in favour of pollsters. Still, there is always room to improve, and, thankfully, always another election to do just that.