The Lessons in Sports Illustrated’s Artificial Intelligence Misuse

By Andre La Rosa-Rodriguez

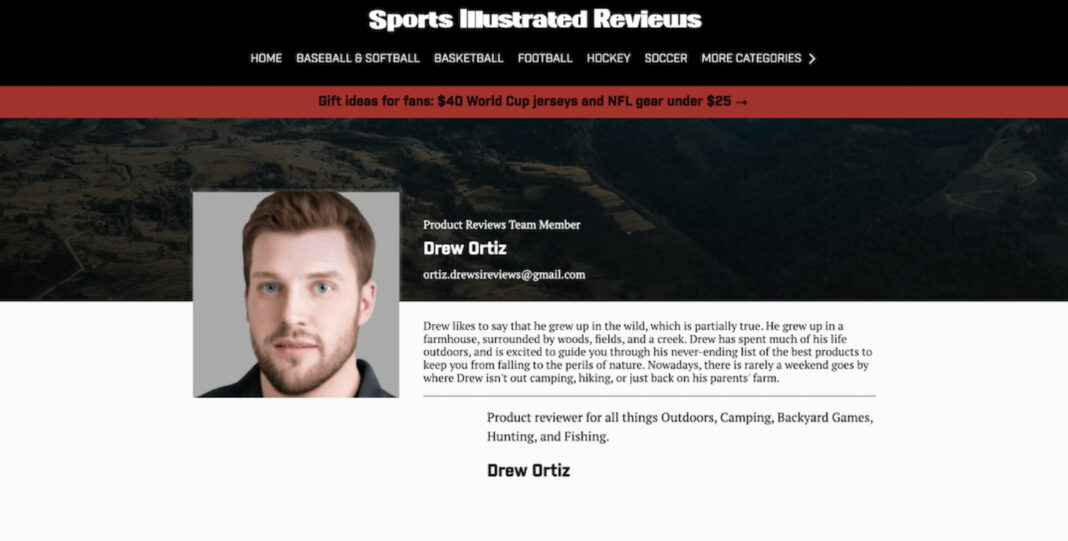

Drew Ortiz has written several outdoor activity review articles for Sports Illustrated. When he isn’t writing, he spends his time hiking, camping, or going back to his parents’ farmhouse where he grew up.

Ortiz is not a real person. He was one of several artificial intelligence (AI) writers that were producing content at Sports Illustrated. Tech-media company Futurism reported the findings in November and traced Ortiz’s author profile headshot to an AI image-generation website.

The Arena Group, which manages Sports Illustrated, said in a statement shortly after the article was published that the reviews were created by AdVon Commerce, a third-party company that had “assured them” the content was “written and edited by humans.” The articles and profiles have since been deleted from the website.

Arena Group confirmed it had ended the partnership and said its initial investigation suggested the Futurism article may not be entirely accurate.

Sports Illustrated did not respond to an email request for comment.

Jeffrey Dvorkin, a senior fellow at the University of Toronto and former news manager at CBC and National Public Radio (NPR), said this is a “further deterioration” of the public’s trust.

“What this did is confirm the worst suspicions in many people’s minds that none of it is really trustworthy,” he said. “It’s a disservice to all media and especially to mainstream media who have been trying desperately to convince the public that they are providing information that is trustworthy.”

“I’m sort of glad it happened because it kind of puts the rest of us on notice,” Dvorkin said.

He said that if Sports Illustrated wants to regain the trust of its readers, it must admit its mistake and “start” by hiring a public editor who will listen to the needs of its audience. Dvorkin held that role for over six years at NPR and said it is one of the toughest jobs in journalism. In his last year, he said, he received 82,000 emails and would “answer as many as (he) could.”

“I got to know the NPR audience really well and they said ‘tell us what you’re doing and why you’re doing it.’ They wanted access to the process,” he said.

The Arena Group CEO Ross Levinsohn was fired on December 11. The company did not comment on questions tying the dismissal to the allegations surrounding AI-generated articles.

A 2023 AI and Media Ethics report by Reporters Without Borders highlighted transparency as one of several ethical principles for using artificial intelligence in the industry. The document said that while there is a “global convergence” around the principles for using AI, there is still a divide over their relative importance.

A World Association of News Publishers survey completed in May found that only 20 per cent of newsrooms have guidelines in place for using generative AI. But an additional poll said almost half are already working with these tools. The guidelines that have been implemented in several media companies include being open about whether the published content has been produced by a human or an AI.

David Caswell, founder of the AI innovation consultancy StoryFlow and former executive project manager at BBC News Labs, said the industry has become more aware of artificial intelligence.

“The entire industry went through this phase in 2023 where they woke up to AI,” he said. “Despite the obvious threats that are facing journalism from generative AI, there really is an opening up of the capacity to experiment and innovate using these tools.”

Caswell became hooked on the integration of AI in the media industry around 2010, during his time at Yahoo. He was tasked with revamping the “news personalization engine” with the “machine-learning” processes that were becoming available and began learning the foundational characteristics of the news.

He said being a part of those technological innovations was the reason why he joined the BBC in 2018.

“You needed something like BBC News Labs — £7 million a year, 20 people, a bunch of engineers. You needed that to do innovations. A lot of newsrooms couldn’t afford that,” Caswell said. “Now you’ve got single individuals in their evenings and weekends who are doing equivalent projects to what news labs would have done three or four years ago with three engineers, a designer.”

He noted that this “phase” is almost over. Caswell said the ongoing situation with Sports Illustrated is a “little educational” moment but in the “grand scheme of things” is not an “interesting event” in the industry.

“What’s happened here is plain, old-fashioned fraud or misrepresentation,” he said. “There’s going to be more of these. People who are incompetent or are a little unethical or people whose backs are to the wall in terms of revenue for their companies are going to make poor choices.”

A 2023 study found that there was an increased level of mistrust when an article was labelled as being AI-generated. However, the difference in trust level compared with articles written by real people was minimal.

Caswell said that while the news industry may be “up in arms” about what’s happened at Sports Illustrated, it remains to be seen how news consumers will respond. However, he noted that trust “(is) hard to get and easy to lose” and AI integration must be done “slowly, carefully, systematically and thoughtfully.”

“If something bad were to happen inadvertently at a big trusted brand, the cost can be quite severe,” he said. “That’s on the minds of people, as it should be. It’s a very significant risk.”

The Arena Group announced in February that they would be partnering with two AI firms to “speed and broaden” the workflow.

“While AI will never replace journalism, reporting or crafting and editing a story, rapidly improving AI technologies can create enterprise value for our brands and partners,” former Arena Group CEO Ross Levinsohn said.

Caswell said the next phase of AI integration will involve the application of these tools in “day-to-day publishing workflows” and that in the long term, up to eight years from now, there will be large structural changes within the industry as AI functionalities become “too great.”

He said there are already “small signs” of where AI is headed. He referenced AI summarization tools and text adaptations in apps like Artifact. But Caswell said there will be areas where AI will not perform well.

“Even in extreme cases, editorial judgement is what remains,” he said. “It’s their willingness and ability to make discerning decisions about information. Is it true, is it representative, is it bringing the values of journalism to a specific piece of news?”

Seventeen employees were laid off from Sports Illustrated two weeks after the Arena Group partnership announcement, and 12 people were hired amid “structural” changes within the company. Arena Group clarified in a statement that this was not an attempt to replace writers.

Dvorkin said that the pressures in management are growing with AI integration and that his time in those roles showed him that it’s a “balancing act” between the public, bosses, and shareholders.

“Before we let AI write the weather, traffic and crime stories, which may not be a bad limit to what AI can do, it all needs to be supervised by a human being,” he said. “That’s going to be a big challenge for management which is under a lot of pressure now to minimize their expenses and maximize their profits.”

However, Dvorkin said that regardless of the changes, the journalism industry must remember to prioritize its audience.

“We have to get back to the idea that we don’t need the technology to do journalism,” he said. “The danger of AI is that it will give us the illusion of making things easier but in fact, we’ll be failing in our mission to serve the public as citizens first and consumers of information second.”